Agile is (half) dead

Agile was built for uncertainty. It wasn’t built for stochastic collaborators.

We are uncovering better ways of developing software by doing it and helping others do it. Through this work we have come to value:

Individuals and interactions over processes and tools

Working software over comprehensive documentation

Customer collaboration over contract negotiation

Responding to change over following a plan

That is, while there is value in the items on the right, we value the items on the left more.

That’s the entire Agile Manifesto. For such a seminal piece of writing, I remember being surprised by its brevity the first time I read it!

Written at the beginning of the millennium, the Agile Manifesto pushed back against the “waterfall” method of engineering that had come to dominate the preceding decades. Waterfall, which had served traditional corporate mechanical and civil engineering so well, made no sense for software engineering in the dot-com era. In this world, engineers worked in teams of 6, not 60; solutions were fuzzy and poorly understood, rather than a variation on last year’s sedan; and new iterations could be shipped in weeks, not months.

The context had changed, and so the method had to as well.

But the context has changed again. Software is increasingly being written by AI, and that trend shows no sign of slowing.

Here are two broadly accepted truths about AI-assisted software development:

AI can generate new code and features in a shockingly short amount of time.

AI-generated code can devolve into a hellish mess of intractable spaghetti just as quickly.

So: does the Agile Manifesto still hold up?

I’d argue not.

In my experience, one of the most effective ways to stop AI from going completely off the rails is to co-create documentation with it upfront. Rather than prompting the AI to "just write some code," I usually begin by describing the problem space and asking it to draft user flows or architecture sketches in markdown. After a few iterations of back-and-forth refinement, we land on a shared understanding of what we're trying to build. Only then do I move to implementation. This upfront investment in alignment pays off dramatically in code quality and long-term maintainability.

Agile treated documentation as time-consuming to write and rarely read in practice. But that logic has flipped: it’s fast to generate, easy for an AI to consume, and essential for maintaining shared context. It captures intent, constraints, and architecture in a form that both humans and machines can work from, and return to when things go off the rails.

AI shifts the developer’s primary interaction loop from teammates to tools. Prototypes are trivial to spin up; the hard part is keeping the system coherent. In this context, documentation isn't a time-sink or a dusty artefact, but an active alignment tool.

So: great documentation is now more valuable than an initial prototype, and a developer’s primary iteration is not with other developers, but with their tools. The first two lines of the Agile Manifesto haven’t reversed completely, but they’re no longer the compass they once were.

Agile is (half) dead.

“But wait!” I hear you protest. “You’re missing the point of Agile! It’s in the name! The underlying message is one of iteration and learning.”

True. But that wasn’t what made Agile unique. It wasn’t even close to the first methodology to espouse those ideas. Kaizen and the Toyota Way in the ’80s, PDSA (Plan, Do, Study, Act) in the ’50s, and Francis Bacon’s Scientific Method in the ’10s (the 1610s, that is) all make the same essential observation:

Ambiguity demands learning. Learning demands iteration.

Agile’s true innovation wasn’t iteration per se; it was recontextualising it for small teams, fuzzy goals, and rapid delivery. But in a world where those teams increasingly consist of humans plus machines, that context has shifted again.

So, if Agile is just the latest iteration on… iteration… what’s the next one?

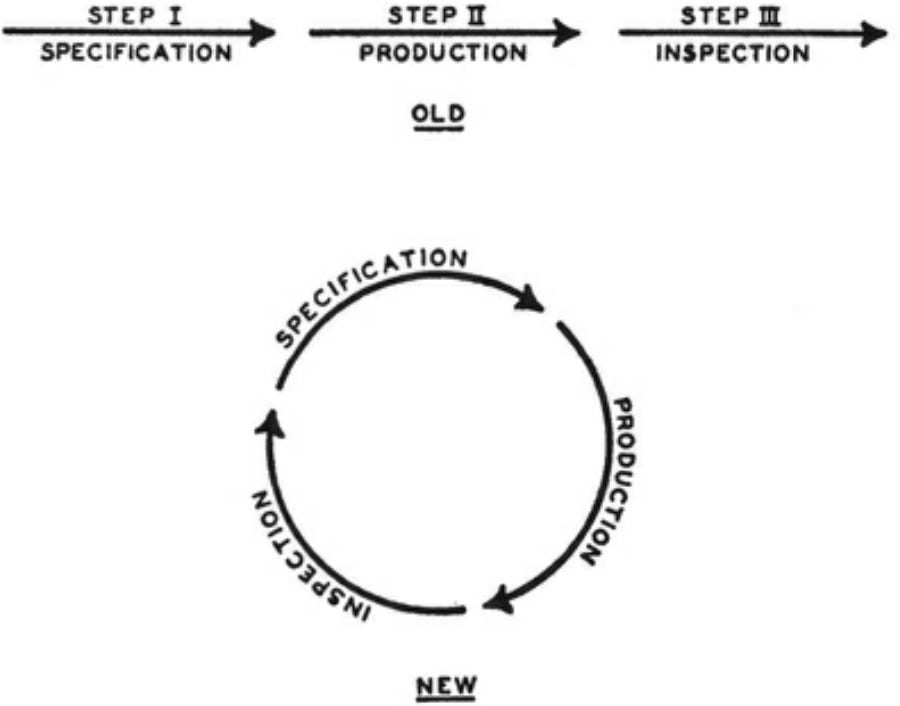

Let’s look at the grandfather of modern iteration methods: the Shewhart Cycle, devised by Dr. Walter Shewhart at Bell Labs in the late 1920s. Shewhart first described it in his 1939 book Statistical Method from the Viewpoint of Quality Control, explicitly citing Francis Bacon as an influence.

Shewhart outlined three steps (Specification, Production, and Inspection) conducted as an iterative cycle rather than the “old” linear process.

I like Shewhart’s model for its simplicity. But it doesn’t entirely fit today’s context.

Firstly, Production and Inspection are innately tied to physical manufacturing. Replacing them with Implementation and Validation takes cues from systems engineering and makes the model more broadly useful.

Implementation is self-explanatory, but the use of Validation in this context may be a little more foreign to those unfamiliar with systems engineering. The IEEE’s definition works well here:

The assurance that a product, service, or system meets the needs of the customer and other identified stakeholders.

Put simply: “Did we build the right thing?”

This contrasts with Verification, another systems engineering term, which asks: “Did we build the thing right?”

But even this updated version of the Shewhart Cycle falls short for AI-driven development.

As we’ve seen, the key to making AI a productive collaborator is to build shared documentation together, and then review and refine that understanding until you're aligned and ready to move forward. That middle step, Alignment, is the critical addition. It’s where the magic happens (or doesn’t).

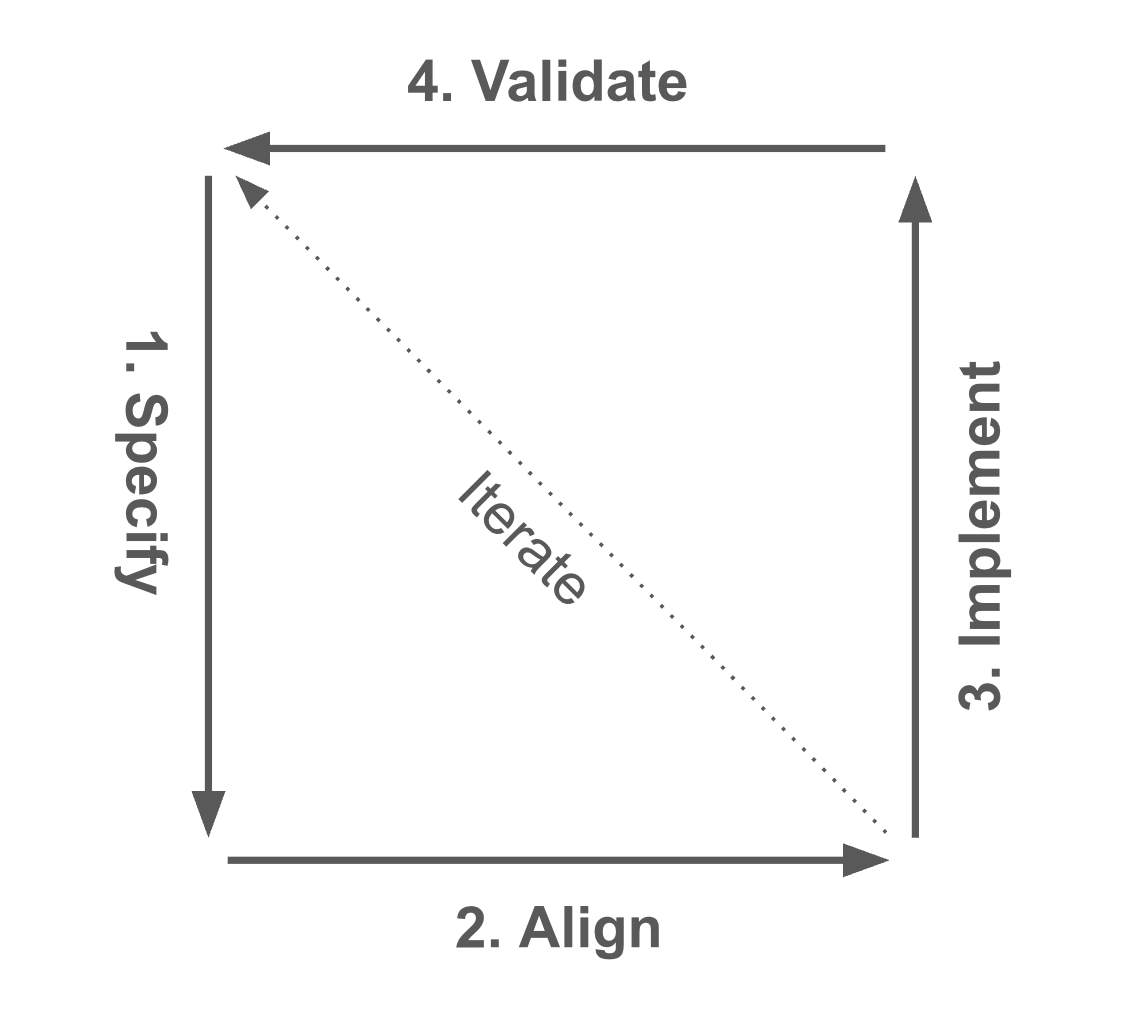

This gives us the SAIV cycle:

Specify → Align → Implement → Validate

(That “AI” sits at the heart of it is a happy coincidence.)

Here’s a visual sketch of how it works:

The dotted line illustrates an internal iteration loop between Specification and Alignment. You don’t just define a problem and let the AI run with it; you engage in a back-and-forth, refining the specs until there’s enough clarity and confidence to move forward. Only then do you implement.

With this in mind, it’s worth flagging that the SAIV cycle iterates over all four steps. This sounds obvious, but it’s extremely tempting to Align only on the first cycle, then fall back to a loop that just instructs the AI to update the Implementation. This is a trap: it lets the documentation, the developer’s mental model, and the implementation drift apart, ultimately leading back to the very problems with AI-driven development identified earlier. Documentation may not need re-aligning every cycle, but a periodic check is important.
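To make the shape of the cycle concrete, here is a minimal, hypothetical sketch of SAIV as plain control flow. It isn’t a tool or API from any real workflow: `run_saiv`, `is_aligned`, `realign_every`, and `max_align_rounds` are invented for illustration, and `is_aligned` simply stands in for the human judgement call of whether the docs, your mental model, and the AI’s understanding agree.

```python
from typing import Callable, List, Tuple

# Hypothetical model of the SAIV cycle (Specify -> Align -> Implement -> Validate).
# `is_aligned(cycle, round)` is an assumed stand-in for a human judgement call,
# not a real API; nothing here talks to an AI.
Step = Tuple[int, str]  # (cycle number, step name)


def run_saiv(
    cycles: int,
    is_aligned: Callable[[int, int], bool],
    realign_every: int = 1,
    max_align_rounds: int = 5,
) -> List[Step]:
    """Return the sequence of steps taken across `cycles` iterations."""
    trace: List[Step] = []
    for cycle in range(1, cycles + 1):
        # Re-align on the first cycle and then every `realign_every` cycles.
        # Setting realign_every > cycles reproduces the "align once" trap.
        if (cycle - 1) % realign_every == 0:
            # Inner (dotted-line) loop: refine the spec until aligned.
            for rnd in range(1, max_align_rounds + 1):
                trace.append((cycle, "specify"))
                trace.append((cycle, "align"))
                if is_aligned(cycle, rnd):
                    break
            else:
                # Never aligned: stop rather than implement on a shaky spec.
                raise RuntimeError(f"no alignment reached in cycle {cycle}")
        trace.append((cycle, "implement"))
        trace.append((cycle, "validate"))
    return trace


if __name__ == "__main__":
    # Assumed scenario: alignment takes two Specify/Align rounds,
    # and the docs are re-checked every second cycle.
    for step in run_saiv(cycles=3, is_aligned=lambda c, r: r >= 2, realign_every=2):
        print(step)
```

In this sketch, the second cycle goes straight to Implement and Validate, while the first and third revisit the spec. Setting `realign_every` larger than the number of cycles models the trap described above: only the first cycle ever touches the spec.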

This is probably a good time to flag that the SAIV cycle isn’t intended to invalidate the principles of the Agile Manifesto. Indeed, as AI gobbles up more and more of the pure technical work, “Individuals” and “Interactions” will become increasingly central to the work of humans, who are best placed to get out there and collect feedback from actual users and other stakeholders. That is, the role of the engineer is drifting closer to that of the product manager: deciding what matters, not just how to build it.

So, where to from here?

Hopefully the SAIV cycle offers a useful mental model for how AI can be integrated into modern engineering workflows. What’s missing so far is a more detailed articulation of how to do Alignment well, particularly through documentation. I have some thoughts on that too, also inspired by systems engineering, but that’s a topic for another day.

Stay tuned.